Long-Term AI Memory for Education and Embodied Intelligence

Simfero develops an ontology-driven memory architecture that enables AI systems — from educational assistants to autonomous robots — to learn continuously, retain skills and operate with explainable long-term context.

Validated in education • Extended to robotics and embodied AI • Optimized for edge devices

Ontology-Based AI Memory

Persistent, structured memory for AI systems that need to retain knowledge, skills and context over long periods of time.

✓ Knowledge and skills stored independently of model weights

✓ Ontological structure instead of flat embeddings

✓ Designed for explainability and auditability

Continuous Learning Engine

Enables AI systems to learn incrementally, preserve experience and build long-term learning trajectories.

✓ Incremental updates without full retraining

✓ Skill and progress tracking over time

✓ Suitable for both human learning and autonomous systems

Explainable Decision Memory

Transparent reasoning through structured memory and explicit knowledge representations.

✓ Auditable decision logic

✓ Clear links between knowledge, actions and outcomes

✓ Designed for ethical and trustworthy AI requirements

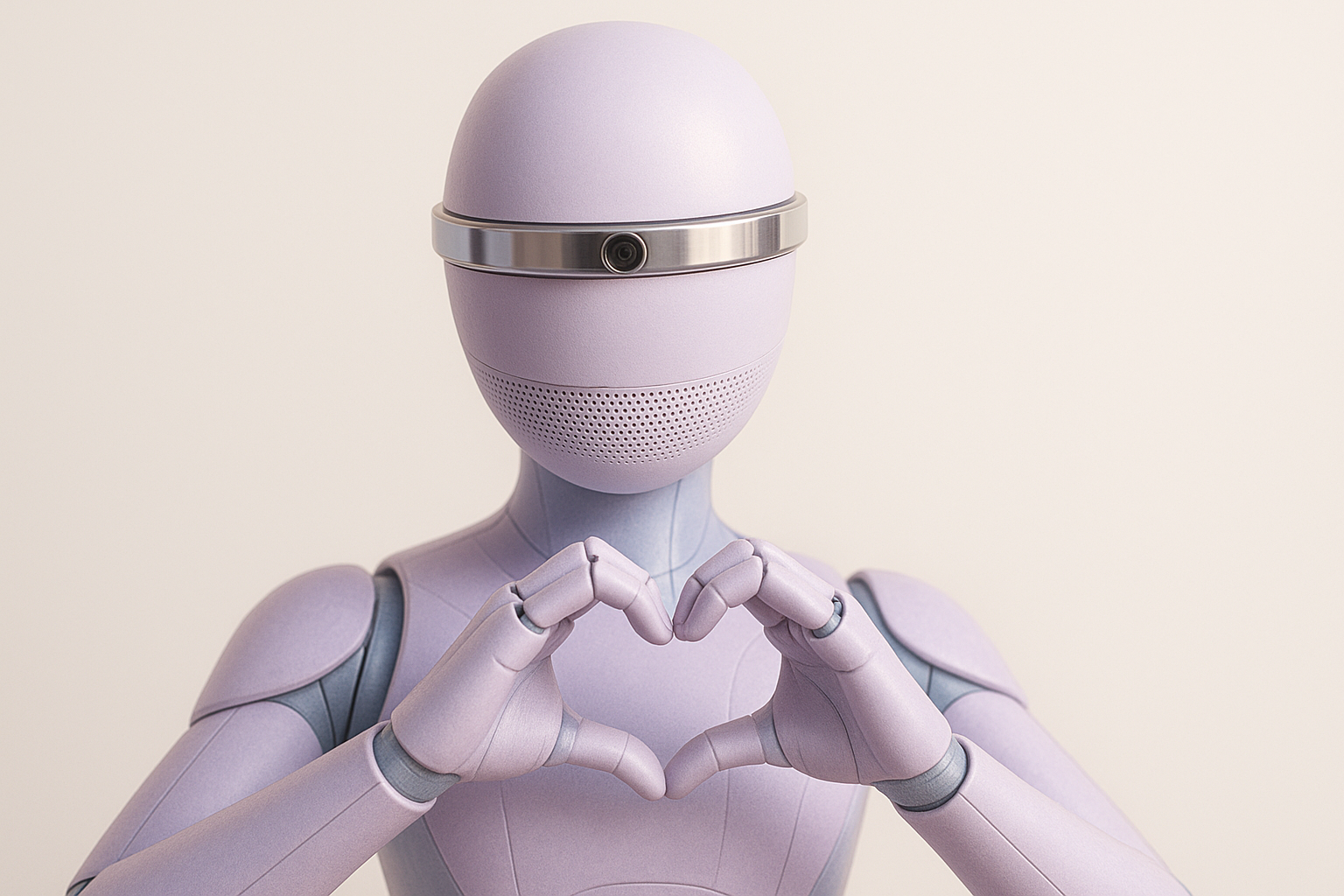

Edge & Embodied AI Ready

Optimized AI memory architecture deployable from cloud environments to edge and micro-devices.

✓ Resource-efficient memory usage

✓ Hybrid and off-chip memory support

✓ Applicable to robotics and autonomous systems

The Problems We Solve

Key Challenges

❌ Modern AI systems struggle with long-term context and memory, relying on short-lived prompts instead of persistent knowledge.

❌ Continuous learning is difficult to achieve without structured representations of skills, progress and experience.

❌ Decision-making in AI systems often lacks explainability and auditability, limiting trust and regulatory readiness.

❌ Deploying AI on edge, embedded and autonomous systems is constrained by memory, compute and energy limitations.

Simfero Solution

✅ Ontology-based long-term AI memory enables persistent storage of knowledge, skills and contextual state beyond model weights.

✅ Continuous learning mechanisms allow AI systems to accumulate experience and adapt over time without full retraining.

✅ Structured memory provides explainable and auditable reasoning, supporting ethical and trustworthy AI requirements.

✅ Optimized memory architecture enables deployment from cloud environments to edge and micro-devices, including embodied systems.

The Impact

🚀 AI systems evolve over time, retaining skills and context instead of restarting from zero.

🚀 Education and robotics benefit from shared memory principles applied across human learning and autonomous intelligence.

🚀 Organizations gain transparent, controllable and regulation-ready AI architectures.

🚀 Europe strengthens its position in trustworthy, resource-efficient and deployable AI technologies.

Why Simfero

From Short-Term Prompts to Long-Term Intelligence

Simfero focuses on building the foundational layer required for AI systems to move beyond short-term interactions toward persistent, explainable and continuously evolving intelligence.

Instead of automating isolated tasks, Simfero develops an ontology-based AI memory architecture that allows systems to retain knowledge, track skills and reason over time. This approach supports both human learning environments and autonomous systems, including robotics, where long-term context and adaptability are critical.

✓ Ontology-based long-term memory independent of model weights

✓ Continuous learning and skill retention over extended periods

✓ Explainable and auditable reasoning by design

✓ Deployable across cloud, edge and embodied AI systems
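As a rough illustration of the idea, the sketch below stores knowledge as explicit subject-predicate-object facts outside any model, adds facts incrementally, and returns the source behind each one. It is a minimal sketch under stated assumptions, not Simfero's implementation: the `Fact` and `OntologyMemory` classes, their methods, and the sample learner data are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Fact:
    """One subject-predicate-object statement plus the source that justified it."""
    subject: str
    predicate: str
    obj: str
    source: str


@dataclass
class OntologyMemory:
    """Hypothetical in-memory store that lives outside any model's weights."""
    facts: list = field(default_factory=list)

    def remember(self, subject, predicate, obj, source):
        # Incremental update: add one fact, with no retraining step involved.
        fact = Fact(subject, predicate, obj, source)
        if fact not in self.facts:
            self.facts.append(fact)
        return fact

    def recall(self, subject=None, predicate=None):
        # Return every stored fact that matches the fields provided.
        return [
            f for f in self.facts
            if (subject is None or f.subject == subject)
            and (predicate is None or f.predicate == predicate)
        ]

    def explain(self, subject, predicate):
        # Audit trail: one readable line per matching fact, including its source.
        return [
            f"{f.subject} {f.predicate} {f.obj} (source: {f.source})"
            for f in self.recall(subject, predicate)
        ]


# Illustrative data only; the identifiers below are made up.
memory = OntologyMemory()
memory.remember("learner_1", "has_skill", "fractions", "quiz_a")
memory.remember("learner_1", "has_skill", "decimals", "quiz_b")
skills = [f.obj for f in memory.recall("learner_1", "has_skill")]
```

Because every fact is an explicit record with a source, a reviewer can inspect what the system knows and why, which is the property that flat embeddings do not offer directly.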

Secure and Trustworthy AI by Design

Security, privacy and trust are foundational requirements for long-term AI memory systems operating in both human-centric and autonomous environments.

Simfero is built with a privacy-first and ethics-by-design architecture, ensuring that AI systems using long-term memory remain transparent, controllable and aligned with European regulatory standards across education, robotics and edge deployments.

🔹 Key Principles

Privacy by Design

Memory architectures designed to minimize data exposure and support on-premise, edge and institution-controlled deployments.

GDPR & EU AI Act Readiness

Full control over stored data, explicit memory structures and compliance-oriented system design.

Explainable AI Memory

Ontology-based representations enable clear inspection of what the system knows, remembers and why decisions are made.

Human-in-the-Loop Control

Memory and decision processes are designed to support human oversight and intervention at all stages.

Bias Awareness and Mitigation

Structured knowledge representations allow explicit analysis, correction and governance of bias across learning and autonomous systems.

Build a Deployable AI Memory Infrastructure

Simfero provides a flexible deployment architecture for ontology-based AI memory systems, adaptable to different operational, regulatory and technical environments.

Cloud Deployment

Designed for rapid experimentation, validation and scaling of AI memory systems in cloud environments.

- Quick deployment for research and validation use cases

- Immediate access to AI memory and reasoning capabilities

- No local infrastructure required

- Centralized updates of memory logic and ontology layers

Edge & Custom Deployment

Designed for controlled, privacy-sensitive and resource-constrained environments, including autonomous and embodied systems.

- Full control over memory, data and execution environment

- On-premise, private cloud and edge-device deployments

- Optimized memory architectures for constrained hardware

- Integration with existing AI, robotics and institutional systems

The same core AI memory architecture is deployable across cloud, edge and embedded environments, enabling consistent long-term intelligence across domains.
